By the time President Obama announced the Precision Medicine Initiative in January 2015, the Genetic Epidemiology Research on Adult Health and Aging (GERA) cohort was already a trailblazing example of this new approach to medical research.

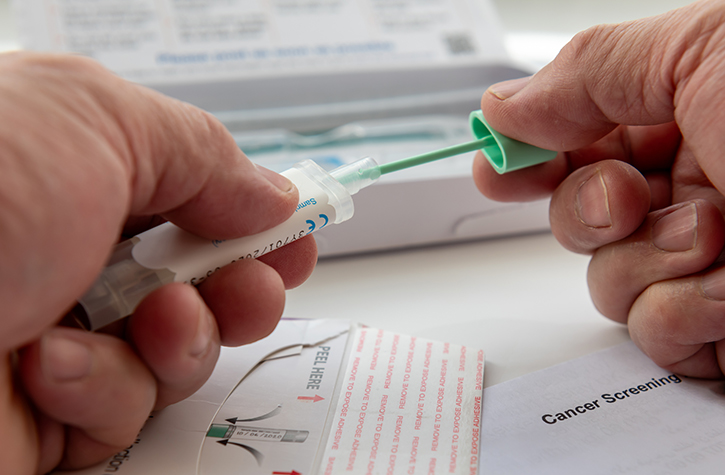

GERA is a group of more than 100,000 members of the Kaiser Permanente Medical Care Plan who consented to anonymously share data from their medical records with researchers, along with answers to survey questions on their behavior and background. Participants also shared their DNA—via saliva samples—to help with the project.

The result is a treasure trove of data, says GERA co-principal investigator Neil Risch (University of California, San Francisco). The study links genotype data from the saliva to environmental and lifestyle data from the surveys, and to clinical, pharmacy, imaging, and diagnostic laboratory data from electronic medical records, all derived from a large, ethnically diverse population.

GERA was formed in 2009 by a collaboration between the Kaiser Permanente Northern California Research Program on Genes, Environment, and Health (RPGEH) and the Institute for Human Genetics at UCSF, and is led by Risch and RPGEH Executive Director Catherine Schaefer.

Today, in a series of three papers published Early Online in GENETICS, the research team formally describes the GERA resource, including the population structure and genetic ancestry of the participants, telomere length analysis, and details of the innovative methods that allowed them to perform the genotyping within 14 months.

Genes to Genomes spoke with Dr. Risch about GERA and the team’s research:

What makes the GERA data so useful?

In my view, it’s Kaiser’s incredibly comprehensive electronic health record system. They’ve been way ahead of the game. The records include pharmacy records, what procedures were performed, scans, lab tests, you name it, it’s all there. Really, the only thing missing is dental. And it all goes back twenty years to 1995. Kaiser places a big emphasis on prevention, so there are lots of screening results that greatly enhance the information on risk factors. Once you attach genetic information to data like this, it enables analysis of so many different phenotypes.

And it’s not just genetics. From their survey responses we learn about patients’ behavior and lifestyle, and from their addresses we can infer all kinds of things about their risk exposures: air quality, water quality, social environment, built environment, income, and so on.

Historically, the way we’ve done genetic studies is to start from scratch. We would recruit a study population, collect all the information, and measure a few things—like disease status or biomarkers—at one specific point in time.

But when we instead use data that is routinely collected as part of care, we have a much richer dataset, often over many time points. For example, I’m interested in lipids. You might think if we have a cohort of 100,000 people, that translates to 100,000 lipid panels. In fact it’s 1.1 million because the average person in the cohort has records from 11 lipid panels. That means we can look at changes over time with age.
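Repeated measurements are what turn a cross-sectional cohort into a longitudinal one: roughly 100,000 people × 11 panels each ≈ 1.1 million lipid panels. As a minimal sketch (not GERA’s analysis code), assuming a hypothetical record layout of `(person_id, age_years, value)` tuples, one can fit an ordinary least-squares slope of a lab value against age for each person with at least two measurements at different ages.

```python
from collections import defaultdict


def per_person_slopes(records):
    """Least-squares slope of a lab value against age, computed per person.

    records: iterable of (person_id, age_years, value) tuples (hypothetical layout).
    People with fewer than two measurements, or all at the same age, are skipped.
    """
    by_person = defaultdict(list)
    for person_id, age, value in records:
        by_person[person_id].append((age, value))

    slopes = {}
    for person_id, points in by_person.items():
        n = len(points)
        if n < 2:
            continue
        mean_age = sum(a for a, _ in points) / n
        mean_val = sum(v for _, v in points) / n
        sxx = sum((a - mean_age) ** 2 for a, _ in points)
        if sxx == 0:
            continue  # no spread in age, so no slope can be estimated
        sxy = sum((a - mean_age) * (v - mean_val) for a, v in points)
        slopes[person_id] = sxy / sxx  # change in value per year of age
    return slopes


# Toy data: person "A" has three panels, person "B" only one.
toy = [("A", 50, 130.0), ("A", 52, 134.0), ("A", 54, 138.0), ("B", 60, 120.0)]
slopes = per_person_slopes(toy)
```

Here `slopes` holds only person "A", whose value rises by 2.0 units per year; "B" is skipped because a single panel carries no information about change over time.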

Because these records are linked to the pharmacy database, we also know what each of those people has been prescribed. So we’ve been able to analyze how people’s LDL cholesterol levels change after they start taking statins. And then we can look at side effects and so on. We don’t have to create a proposal for each of those questions; we just go back to the database.
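The linkage between lab results and pharmacy records is what makes a before/after comparison possible. The following is a minimal sketch under stated assumptions: a hypothetical per-person list of `(date, ldl_mg_dl)` measurements and a statin start date taken from the first pharmacy fill. The function name and layout are illustrative only, and a real analysis would also need to account for dose, adherence, and other confounders.

```python
from datetime import date
from statistics import mean


def ldl_change_after_statin(ldl_measurements, statin_start):
    """Mean on-treatment LDL minus mean baseline LDL for one person.

    ldl_measurements: list of (date, ldl_mg_dl) tuples (hypothetical layout).
    statin_start: date of the first statin fill (assumed to come from pharmacy data).
    Measurements on or before the start date count as baseline.
    Returns None if either side of the start date has no measurements.
    """
    baseline = [v for d, v in ldl_measurements if d <= statin_start]
    on_treatment = [v for d, v in ldl_measurements if d > statin_start]
    if not baseline or not on_treatment:
        return None
    return mean(on_treatment) - mean(baseline)


toy_ldl = [
    (date(2010, 3, 1), 160),
    (date(2011, 2, 15), 150),
    (date(2012, 6, 1), 110),
    (date(2013, 5, 20), 100),
]
change = ldl_change_after_statin(toy_ldl, statin_start=date(2011, 6, 1))
```

With this toy history the baseline mean is 155 mg/dL and the on-treatment mean is 105 mg/dL, so `change` is -50.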

And better still, a cohort like this only gets more valuable over time, because the records get updated every night. That means we can now do prospective, rather than retrospective, analysis.

Skeptics thought electronic health data would turn out to be less reliable than targeted measurements, but that’s wrong. Over and over again, we’ve validated that electronic records are actually fantastic for these kinds of studies. In fact, I see this as a phase shift in the way genomics research will be done.

What findings has the GERA data yielded so far?

We have findings for prostate cancer, allergies, glaucoma, macular degeneration, high cholesterol, blood pressure—and those are only a few examples. It’s not just diseases either. For example, we have the results of PSA tests [prostate specific antigen screening tests for prostate cancer risk]. So we were able to find up to 30 novel variants that influence PSA levels.

The beauty of this resource is that no matter what phenotype we look at, we find associations—everything we touch! These are subtle effects, but in this cohort, if they exist, you’re going to find them. Even though people complain that the risks detected by GWAS are modest, I argue that this simply reflects reality—not everything is a Mendelian disorder. Model organism geneticists have known this for years: these traits are polygenic and there are many genes involved.

What did you learn from the population structure analysis?

Traditionally, research participation has been biased toward people with Northern European ancestry. To make up for that bias, we had a mandate to maximize minority representation when we selected participants. In the end, around 20% of the cohort were from a minority ethnicity/race/nationality.

We were particularly interested in people who checked more than one box on the ethnicity questionnaire. More and more people are identifying as multi-ethnic, which adds complexity and can pose some technical challenges for genomic studies. At the same time, it presents opportunities for analyzing genetic and social contributions to disease differences between groups.

Yambazi Banda (UCSF), first author of the population structure paper, is very interested in the relationship between genetic ancestry and how people self-identify. We found that the relationship is very strong, and the way people describe their backgrounds generally matches their genetics.

One interesting aspect of the data is that we ended up with related individuals among the cohort, including around 2,000 pairs of full siblings. That meant we could tell whether these siblings described their ethnicity in the same way as each other. Most did, but those who reported a different ethnicity from their siblings tended to be multi-ethnic. Multi-ethnic people also tended to be younger, which probably reflects social changes and increased intermarriage across racial and ethnic boundaries.
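The sibling comparison amounts to a concordance check over pairs. A minimal sketch, assuming each sibling’s answer is stored as the set of ethnicity boxes they checked (the labels and layout are hypothetical): it reports the fraction of pairs whose answers match exactly and, among discordant pairs, the fraction in which at least one sibling checked more than one box.

```python
def sibling_concordance(pairs):
    """Summarize how consistently sibling pairs self-report ethnicity.

    pairs: list of (boxes_a, boxes_b), each an iterable of the ethnicity
    boxes one sibling checked (hypothetical layout).
    Returns (concordance_rate, multi_ethnic_share_of_discordant); the second
    value is None when every pair is concordant.
    """
    if not pairs:
        raise ValueError("need at least one sibling pair")
    normalized = [(frozenset(a), frozenset(b)) for a, b in pairs]
    discordant = [(a, b) for a, b in normalized if a != b]
    concordance_rate = 1 - len(discordant) / len(normalized)
    if not discordant:
        return concordance_rate, None
    # A sibling who checked more than one box is treated as multi-ethnic.
    multi = sum(1 for a, b in discordant if len(a) > 1 or len(b) > 1)
    return concordance_rate, multi / len(discordant)


toy_pairs = [
    ({"White"}, {"White"}),
    ({"Asian"}, {"Asian"}),
    ({"Black"}, {"Black"}),
    ({"White", "Latino"}, {"Latino"}),
]
rate, multi_share = sibling_concordance(toy_pairs)
```

In the toy data three of four pairs agree (`rate` is 0.75), and the single discordant pair involves a sibling who checked two boxes (`multi_share` is 1.0).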

How and why did you genotype the samples so quickly?

Around 2008 we had 85,000 saliva specimens and consent to use them, but we needed funding. This was during the economic recession, which, as it turns out, was also when Arlen Specter and Congress pushed for 10 billion dollars in extra funding for the NIH as part of the economic stimulus package. We received Grand Opportunity Project funding from the National Institute on Aging (NIA) because the average age of the cohort was 63, and the NIA was interested in funding genomic analyses of age-related diseases. But we needed to finish the work in two years, or just 14 months in the lab.

In 2009, it was a big deal to do something like this so fast. We were under the gun to get this data, with assays running 24/7. Thankfully, we had a lot of hands-on help from Affymetrix [manufacturers of the genotyping chips]. And Mark Kvale, our lead scientific programmer; postdoc Stephanie Hesselson; and Pui-Yan Kwok, who directs the genomics core, did a huge amount of work to make the project a success.

Part of our solution to the time crunch was developing real-time turnaround in the data analysis. So within three hours after the results came out of the GeneTitan [the genotyping array processing stations], we knew if anything was going wrong. Working in this way probably saved us hundreds of thousands of dollars.

We also improved the way the genotypes were called [inferred], realizing that Affymetrix’s historical method was suboptimal for rare variants. The upshot is that Affymetrix has since changed its protocol and has used a lot of the lessons that we learned with the GERA project to benefit other very large genotyping projects using the same platform, for example, the Million Veteran Program.

It wasn’t just genotyping either. Liz Blackburn and her group were assaying telomere length in all the samples at the same time [Blackburn is a UCSF scientist who shared the 2009 Nobel Prize in Physiology or Medicine for discovering how chromosomes are protected by telomeres and the enzyme telomerase]. No one had done anything with telomeres on this scale before. The first author on the telomere paper, Kyle Lapham, had to create a robotic system for these very tricky experiments. In the end, it took only four months to do the assays. It’s quite an achievement!

The results confirmed that the data is sound—for example, we see that telomeres get shorter with age as expected. We also observed a sex difference, where women tend to have longer telomeres than men.

Remarkably, there was some evidence that telomere length is related to survival. Among people under 75, younger people tend to have longer telomeres. But among those over 75 there’s a reversal: the oldest people tend to have longer telomeres.

What’s next for GERA?

We’re working on publishing more of the results; there are so many phenotypes that are just begging for analysis! At this stage we’re operating largely as a resource for other scientists. Researchers can apply for data access via Kaiser Permanente [the Kaiser Permanente Northern California Research Program on Genes, Environment, and Health] or via NIH’s dbGaP database.

The field is moving away from SNP genotyping and in the direction of sequencing, with the rationale that the SNP arrays don’t provide good coverage of rare variants. But in reality the amount of information you get from these arrays is vastly more than just the several hundred thousand sites on the array because you can impute the genotypes at other sites by using reference sequence panels.

Tom Hoffmann (UCSF), who helped design the GERA genotyping arrays, has done a lot of work on imputation in this cohort. For example, we’ve published analyses of a rare mutation in HOXB13 that increases the risk of prostate cancer. The carrier frequency in people with Northern European ancestry is only about 0.3%, but given that we have 100,000 people in the cohort, we expect carriers among them. But how do we find them? That particular variant was not included on the SNP arrays.
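Why carriers are expected at all is simple arithmetic. Writing $f$ for the carrier frequency (about 0.003) and $N_{\mathrm{NE}}$ for the number of participants with Northern European ancestry (a count not given here, so it is left symbolic), the expected number of carriers is $f \, N_{\mathrm{NE}}$, and the whole cohort of roughly 100,000 sets an upper bound:

$$
E[\text{carriers}] \approx f \, N_{\mathrm{NE}} = 0.003 \times N_{\mathrm{NE}}, \qquad N_{\mathrm{NE}} \le 100{,}000 \;\Rightarrow\; E[\text{carriers}] \lesssim 300
$$

Put differently, every 10,000 participants with Northern European ancestry would be expected to include about 30 carriers.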

We found we could identify carriers relatively well by imputing genotypes at the mutation site using reference sequence panels and the genotypes of surrounding SNPs. The beauty is, once we had identified those carriers, the health records allowed us to look not only at prostate cancer but at all cancers. Sure enough, we showed that this mutation is also a risk factor for a number of other cancers.
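The core idea of imputation is that a person’s array genotypes around a site identify which sequenced reference haplotypes they resemble, and those haplotypes reveal the allele at the unmeasured site. The toy sketch below uses plain nearest-neighbor haplotype matching (minimum Hamming distance over flanking SNPs); it is a deliberate simplification, not the hidden-Markov-model approach used by production tools such as IMPUTE2 or Minimac, and not a description of the GERA pipeline. It assumes phased haplotypes coded as 0/1 alleles and a hypothetical reference panel of `(flanking_alleles, target_allele)` pairs.

```python
def impute_haplotype(flanking, reference_panel):
    """Estimate the probability that one phased haplotype carries the target allele.

    flanking: tuple of 0/1 alleles at array SNPs near the target site.
    reference_panel: list of (flanking_alleles, target_allele) from sequenced
    haplotypes (hypothetical layout). Returns the fraction of the best-matching
    reference haplotypes (fewest mismatches) that carry allele 1.
    """
    def mismatches(ref_flanking):
        if len(ref_flanking) != len(flanking):
            raise ValueError("flanking SNP sets must be the same length")
        return sum(x != y for x, y in zip(flanking, ref_flanking))

    best = min(mismatches(ref) for ref, _ in reference_panel)
    matches = [allele for ref, allele in reference_panel if mismatches(ref) == best]
    return sum(matches) / len(matches)


def impute_dosage(hap1, hap2, reference_panel):
    # Expected count (0 to 2) of the target allele in a diploid person.
    return impute_haplotype(hap1, reference_panel) + impute_haplotype(hap2, reference_panel)


panel = [
    ((0, 0, 1, 1), 0),
    ((0, 1, 1, 0), 0),
    ((1, 1, 0, 1), 1),  # the only haplotype background carrying the rare allele
    ((1, 1, 0, 0), 0),
]
dosage = impute_dosage((1, 1, 0, 1), (0, 0, 1, 0), panel)
```

Here the first haplotype matches the carrier background exactly and the second most resembles two non-carrier backgrounds, so `dosage` is 1.0, consistent with a heterozygous carrier. Calling carriers from dosages would need a threshold, which is a tuning choice not specified in the text.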

I believe it’s very realistic that, using imputation, the GERA cohort will end up with good coverage of variants with frequencies of around one in a thousand. That means we’ll have data on up to 50 million variants, rather than just the several hundred thousand on the array.
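The cohort’s size is what makes a one-in-a-thousand frequency workable. Assuming “frequency” here means allele frequency $p = 0.001$ and taking $N \approx 100{,}000$ diploid participants, the expected numbers of allele copies and of carriers are:

$$
2Np = 2 \times 100{,}000 \times 0.001 = 200 \ \text{copies}, \qquad N\left[1 - (1-p)^2\right] = 100{,}000 \times 0.001999 \approx 200 \ \text{carriers}
$$

So even a variant this rare should appear in a couple of hundred people, enough to study if it can be imputed accurately.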

As you can tell, I’m enthusiastic about this project! At the beginning of a big project like this, you really don’t know whether it’s going to work. It’s gratifying that after such a major investment of time and effort, we ended up with a resource that is so valuable and exciting.

CITATIONS:

- Characterizing Race/Ethnicity and Genetic Ancestry for 100,000 Subjects in the Genetic Epidemiology Research on Adult Health and Aging (GERA) Cohort

- Genotyping Informatics and Quality Control for 100,000 Subjects in the Genetic Epidemiology Research on Adult Health and Aging (GERA) Cohort

- Automated Assay of Telomere Length Measurement and Informatics for 100,000 Subjects in the Genetic Epidemiology Research on Adult Health and Aging (GERA) Cohort

This article was originally published in Genes to Genomes, a blog of the Genetics Society of America, on June 23, 2015.